Nowadays, many IoT devices make our everyday life easier by performing intelligent tasks such as data capture and process automation. However, the growing number of these devices is becoming problematic in terms of latency and bandwidth. For this reason, alternatives that may solve these problems are being explored, and Edge Computing is one of them.

Edge Computing devices are those that can process the information they capture locally, without relying on the network. As a result, latency and bandwidth issues can be significantly reduced. This lowers radio spectrum saturation and improves both latency and power consumption.

The main goal of this project is to create a system that can develop and run Artificial Intelligence algorithms for autonomous driving and driver assistance, always following the Edge Computing philosophy. To do so, we have used Google Coral, a hardware platform that fits our needs well. It allows us to develop all the Edge Computing algorithms while offering suitable power consumption and processing capabilities.

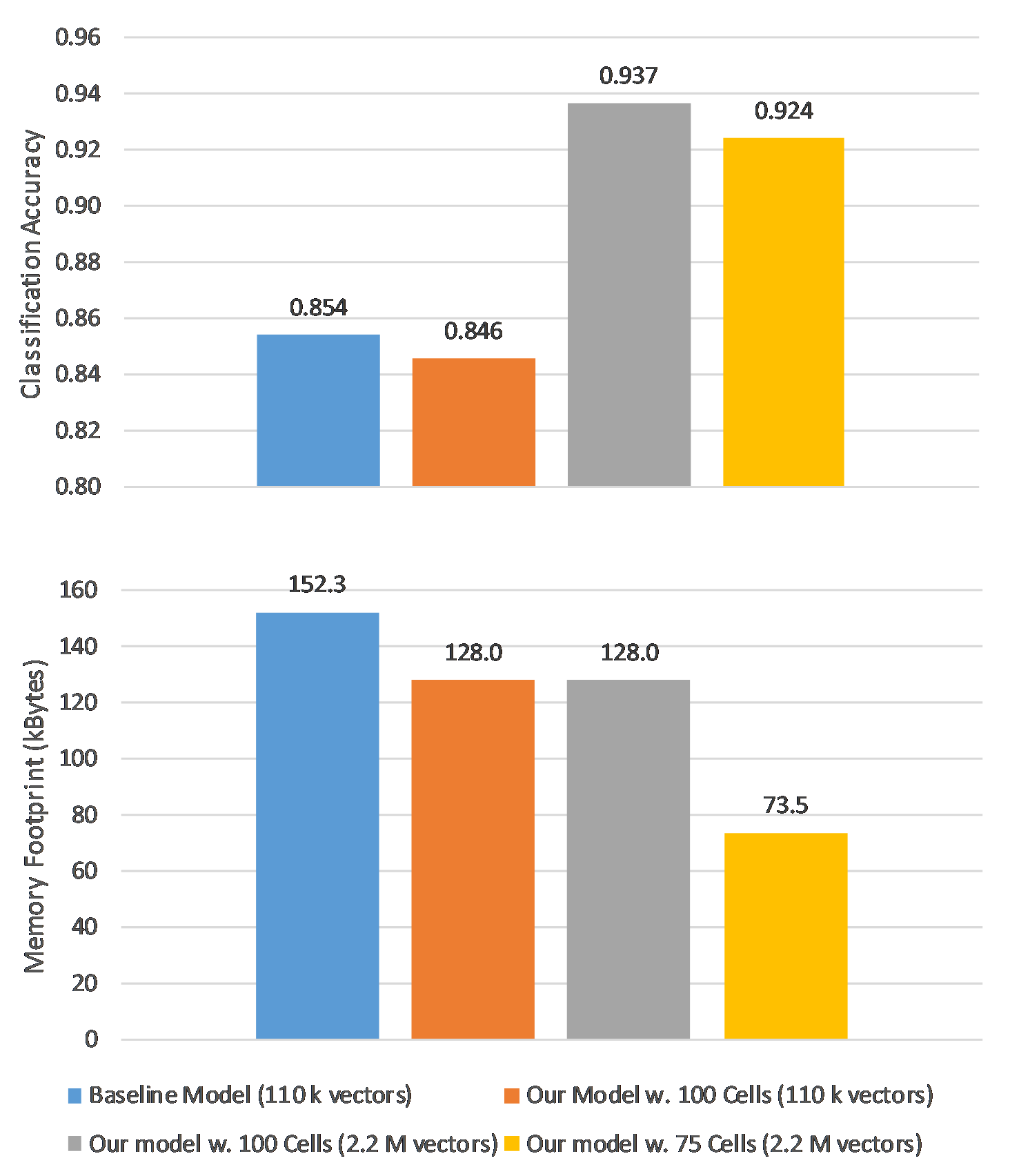

Finally, we have tested our system in a real situation, evaluating the quality of the results, the resources used (latency, bandwidth…), and its advantages and disadvantages compared to existing technologies in this area. These experiments show that the quality of our Edge Computing system is sufficient for the tasks it was designed for. In addition, resource usage has been optimized relative to Cloud Computing alternatives, making this project a faster, more efficient and more economical option.