Virtually Enhanced Senses (VES)

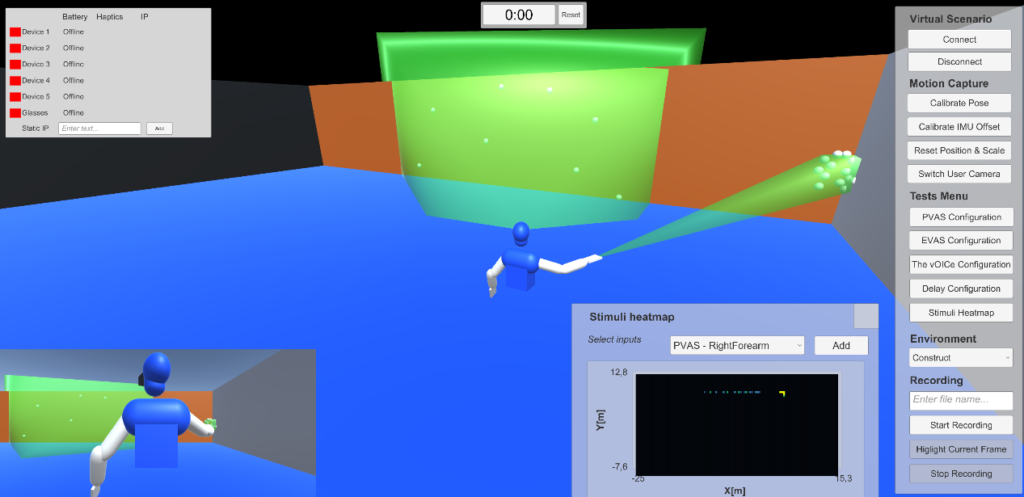

The Virtually Enhanced Senses system is a novel, highly configurable wireless sensor-actuator network conceived as a development and test-bench platform for navigation systems adapted to blind and visually impaired people. It immerses its users in ‘walkable’ purely virtual or mixed environments with simulated sensors, so that navigation system designs can be validated before prototype development.

Here we will publish the source code, as well as test results on end-user mobility and orientation performance with the simulated systems.

Brief introduction

Publications

- VES: A Mixed-Reality Development Platform of Navigation Systems for Blind and Visually Impaired (link): MoCap data

- VES: A Mixed-Reality System to Assist Multisensory Spatial Perception and Cognition for Blind and Visually Impaired People (link)

Demo

For testing purposes, three executables are included that allow you to deploy a simplified version of VES (tutorial).

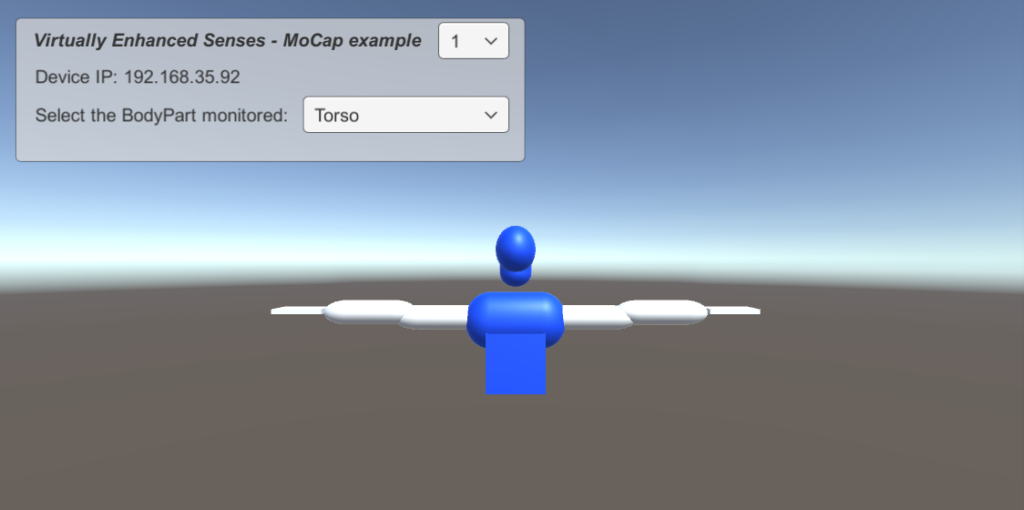

- VES – MoCap example (download): it turns an Android device into a wireless 3-DoF motion capture sensor.

- VES – Glasses (download): analogously, this application turns an ARCore-compatible device into a wireless 6-DoF motion capture system.
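The two demo apps differ in the degrees of freedom (DoF) they report: a 3-DoF sensor captures only orientation, while a 6-DoF system also tracks position. The sketch below illustrates that difference under stated assumptions. It is not the VES data format, which this README does not describe. The class names `Pose3DoF` and `Pose6DoF`, the unit-quaternion `(w, x, y, z)` representation and the z-up axis choice are all hypothetical.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


def cross(a: Vec3, b: Vec3) -> Vec3:
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


@dataclass
class Pose3DoF:
    """Hypothetical 3-DoF sample: orientation only, as a unit quaternion (w, x, y, z)."""
    q: tuple[float, float, float, float]

    def rotate(self, v: Vec3) -> Vec3:
        # Rotate v by the unit quaternion using the expanded form of q * v * q^-1:
        # t = 2 (u x v), v' = v + w t + u x t, where u is the quaternion's vector part.
        w, x, y, z = self.q
        u = (x, y, z)
        t = tuple(2.0 * c for c in cross(u, v))
        c = cross(u, t)
        return tuple(v[i] + w * t[i] + c[i] for i in range(3))


@dataclass
class Pose6DoF(Pose3DoF):
    """Hypothetical 6-DoF sample: the same orientation plus a position (assumed metres)."""
    p: Vec3 = (0.0, 0.0, 0.0)

    def to_world(self, v: Vec3) -> Vec3:
        # Rotate a device-frame point, then translate it by the device position.
        r = self.rotate(v)
        return tuple(r[i] + self.p[i] for i in range(3))


# Example: a quarter turn about the (assumed) vertical z axis.
half = math.radians(90) / 2
q = (math.cos(half), 0.0, 0.0, math.sin(half))
head = Pose6DoF(q, p=(2.0, 0.0, 0.0))
print(head.rotate((1.0, 0.0, 0.0)))    # orientation alone (what 3-DoF offers)
print(head.to_world((1.0, 0.0, 0.0)))  # orientation plus position (6-DoF)
```

With only 3-DoF data, a system can tell which way the user is facing but not where they are standing. This is why the 6-DoF variant relies on an ARCore-compatible device, which provides positional tracking.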

Source code

Access our repository here.